Azure Project Condenser

For a long time, I have been a strong believer in always viewing video in the highest possible quality. In 2021, to me this means 4k at 60 frames per second and a high bitrate for personal/family videos, or 4k at 24 or 30 frames per second as a reasonable compromise. After years of watching films in 'DVD' quality (i.e. 576i, 576p, 480i and 480p), and of looking at heavily compressed, even lower bitrate 480p videos from pre-smartphone phones with noticeable colour artefacts, the jumps to high bitrate 1080p and then to 4k were something I appreciated so much that it has always been difficult for me to settle for anything lower (perhaps my glasses increase my sensitivity to low quality video!). Where 4k video is concerned, though, it is important to remember that the ideal setup is not always available to the end user: either a 4k display paired with a capable decoder (a separate playback device that feeds the display), or a 4k TV with playback support over USB, LAN or Wi-Fi.

In a lot of cases, modern smartphones give people plenty of capability to record 4k video, but far less capability to play it back properly. The common solutions most people use, such as Cast, Miracast or phone screen mirroring, heavily compress the stream; the alternative of a capable 4k OTT set top box connected to a 1080p screen underserves 4k video to begin with (somewhat akin to putting high-octane, high performance fuel in a vehicle that is limited to 50mph!). Cast solutions also often suffer from compatibility issues with the TV, stream compression and dropped signals.

With precious videos increasingly living on the phone and taking up a relatively large amount of storage, and with most people sticking to the free but limited cloud storage tiers of services like Google Photos, OneDrive or Apple iCloud, there is an increased risk of losing this data when it lives on a very mobile device that can get stolen, cracked/broken, burnt or dropped into a pool for more than 30 minutes.

A middle ground might be an approach that has a longer list of prerequisites and some upfront cost, but that works better in the long term: recorded videos are preserved in colder storage such as an HDD, and the 4k recordings are condensed to 1080p so that they give the best possible viewing experience on the older 1080p displays and media playback devices that people commonly have at home. Such an architecture could be built from the following:

- Android camera application that lives on the user's phone, which has access to 4G/5G or Wi-Fi

- Services running in the Azure Cloud (Azure Functions, Azure Media Services, Azure Blob Storage, Azure Storage Queue)

- A small mini-PC running Windows (or any PC really) with the .NET 5 runtime installed; this can run headless after setup

- A .NET 5 console application installed as a Windows service running on the mini-PC

The Camera Application

To start the process, we need a new camera application installed on the smartphone to capture video. I used Android in this work, but the same principles apply to iPhone since the Xamarin framework used below is cross-platform. The purpose of this application is to record videos in the highest quality and upload them directly into a known Azure storage account as blobs (this is discussed in the section "The Services running in the Cloud"). The app is needed because this direct connection to Azure is something the target phone's default camera app won't be able to do. I used Visual Studio 2019 with the Azure development and Mobile development with Xamarin workloads added from the VS Installer to support development of Android and iPhone apps.

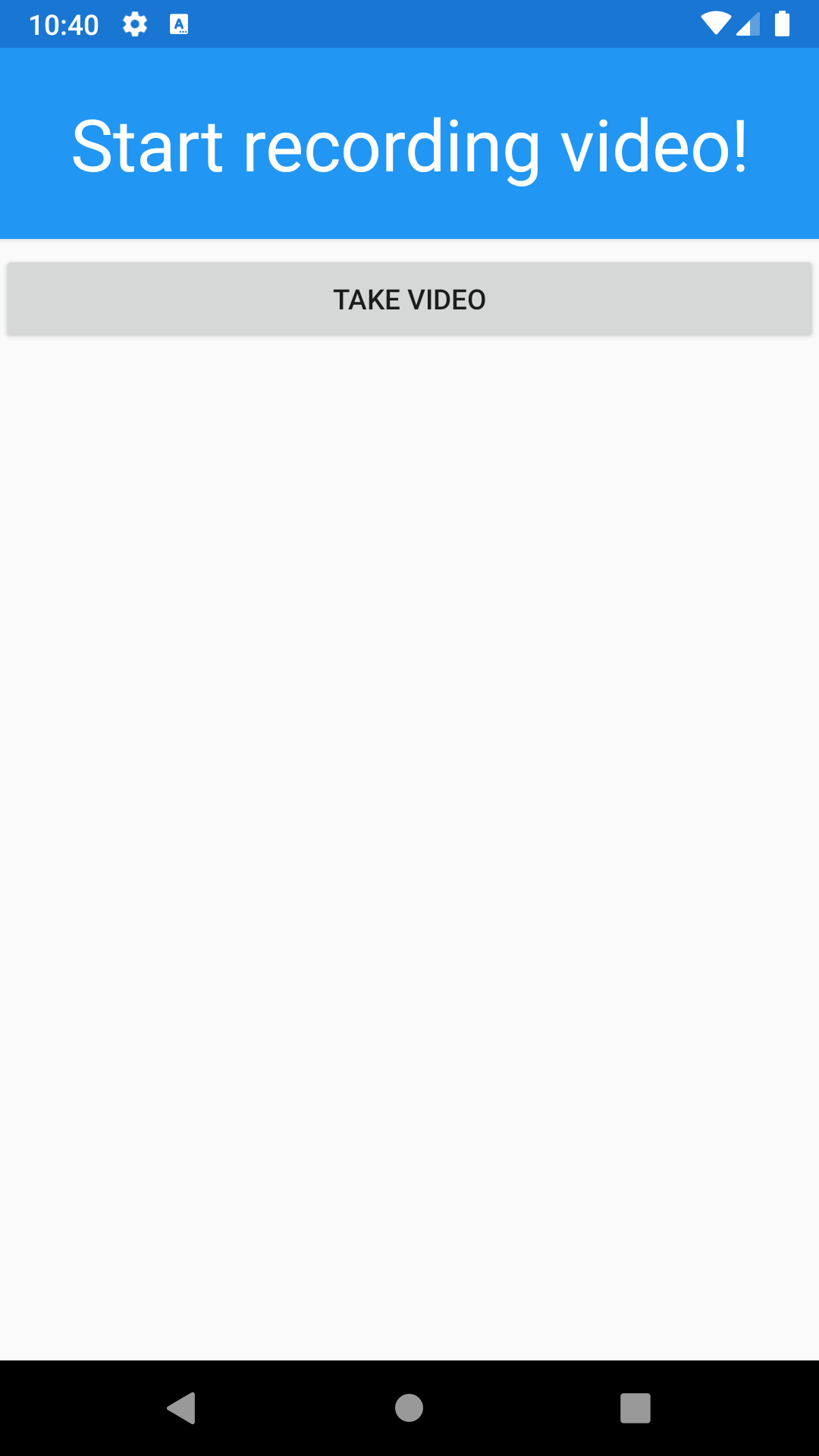

Starting from the Xamarin Mobile App (Xamarin.Forms) template in Visual Studio with the Blank option chosen, I set MainPage.xaml in the class library project to the following:

<ContentPage xmlns="http://xamarin.com/schemas/2014/forms"

xmlns:x="http://schemas.microsoft.com/winfx/2009/xaml"

x:Class="newvideo.MainPage">

<StackLayout>

<Frame BackgroundColor="#2196F3" Padding="24" CornerRadius="0">

<Label Text="Start recording video!" HorizontalTextAlignment="Center" TextColor="White" FontSize="36"/>

</Frame>

<Button Text="Take Video" Clicked="Capture_Video" x:Name="videoButton" />

</StackLayout>

</ContentPage>

This sets the UI to be basic as shown below:

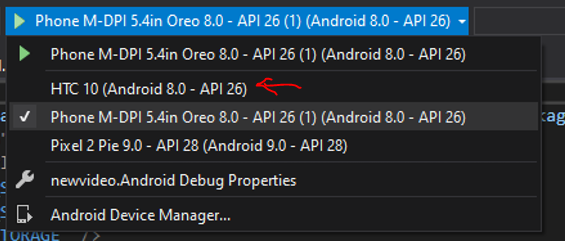

My target Android version here is 8.0 Oreo and the target phone (real phone not AVD) I used is an HTC 10 updated to 8.0 Oreo. In the AndroidManifest.xml, I set the permissions as follows to get access to the camera, storage and network:

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android" android:versionCode="1" android:versionName="1.0" package="com.companyname.newvideo" android:installLocation="auto">

<uses-sdk android:minSdkVersion="25" android:targetSdkVersion="26" />

<application android:label="newvideo.Android" android:theme="@style/MainTheme"></application>

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_INTERNAL_STORAGE" />

<uses-permission android:name="android.permission.WRITE_INTERNAL_STORAGE" />

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.CAPTURE_AUDIO_OUTPUT" />

</manifest>

The 'Take Video' Button is wired to do the following in the code-behind in MainPage.xaml.cs:

using Android.Widget;

using Microsoft.Azure.KeyVault;

using Microsoft.IdentityModel.Clients.ActiveDirectory;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Blob;

using System;

using System.IO;

using System.Threading.Tasks;

using Xamarin.Essentials;

using Xamarin.Forms;

using ClientCredential = Microsoft.IdentityModel.Clients.ActiveDirectory.ClientCredential;

namespace newvideo

{

public partial class MainPage : ContentPage

{

public MainPage()

{

InitializeComponent();

}

private int uploadCompleteCount { get; set; }

private int recordedCount { get; set; }

private async void Capture_Video(object sender, EventArgs e)

{

var result = await MediaPicker.CaptureVideoAsync(new MediaPickerOptions { Title = "cloudvideo" });

if(result != null)

{

recordedCount++;

var context = Android.App.Application.Context;

var shortDuration = ToastLength.Short;

Toast.MakeText(context,"Uploading..", shortDuration).Show();

////Save the video to cloud directly from incoming stream and also locally to the Android Download folder

var localFilePath = Path.Combine("/storage/emulated/0/Download", result.FileName);

using (Stream cloudStream = await result.OpenReadAsync())

{

await SaveToBlobStorage(cloudStream);

using (FileStream localFileStream = File.OpenWrite(localFilePath))

{

cloudStream.Position = 0;

await cloudStream.CopyToAsync(localFileStream);

}

}

}

}

/////Place Additional Methods here

}

}

Additional Methods:

private async Task SaveToBlobStorage(Stream stream)

{

var context = Android.App.Application.Context;

var longDuration = ToastLength.Long;

string storeAccount = await AccessKeyVault("[YOUR_CONNECTIONSTRING_SECRET_NAME]");

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(storeAccount);

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

CloudBlobContainer container = blobClient.GetContainerReference("videodata");

CloudBlockBlob blockBlob = container.GetBlockBlobReference("Video" + DateTime.Now.ToString("yyyyMMddHHmmss") + ".mp4");

await container.CreateIfNotExistsAsync();

await blockBlob.UploadFromStreamAsync(stream);

uploadCompleteCount++;

Toast.MakeText(context, uploadCompleteCount + " of " + recordedCount + " Uploaded", longDuration).Show();

}

public static async Task<string> AccessKeyVault(string secretName)

{

string clientId = "[YOUR_SERVICE_PRINCIPAL_CLIENTID]";

string clientSecret = "[YOUR_SERVICE_PRINCIPAL_CLIENTSECRET]";

KeyVaultClient kvClient = new KeyVaultClient(async (authority, resource, scope) =>

{

var adCredential = new ClientCredential(clientId, clientSecret);

var authenticationContext = new AuthenticationContext(authority, null);

return (await authenticationContext.AcquireTokenAsync(resource, adCredential)).AccessToken;

});

var keyvaultSecret = await kvClient.GetSecretAsync("https://[YOUR_KEYVAULT_NAME].vault.azure.net", secretName);

return keyvaultSecret.Value;

}

To install the app onto a real phone, first enable USB debugging on the phone: go to Settings, then Developer options, and toggle USB debugging on. Then connect the phone to the development PC via USB. In Visual Studio, the phone should now appear as a target device to deploy the app to. Select your phone from the list and run the app from there (doing this once installs the APK on the phone, and it remains there even after the USB cable is disconnected).

When running the newly installed camera app on the phone for the first time, disconnected from the PC, you may be asked to allow access to storage and the camera, and Google Play Protect may ask whether you really wish to run the application. When you tap Take Video, then record and accept a new video, it is automatically sent to a "videodata" folder in Azure.
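The blob name in SaveToBlobStorage is built from the phone's local time to the nearest second, so two phones uploading in the same second could end up sharing a name. As a minimal sketch of one way around that (BlobNaming and NewVideoBlobName are hypothetical names, not part of the app above), the name can combine a UTC timestamp with a GUID:

```csharp
using System;
using System.Globalization;

public static class BlobNaming
{
    // Hypothetical helper: builds "Video<UTC timestamp>-<guid>.mp4".
    // The timestamp keeps names sortable; the GUID keeps them unique.
    public static string NewVideoBlobName(DateTime utcNow, Guid id)
    {
        string stamp = utcNow.ToString("yyyyMMddHHmmss", CultureInfo.InvariantCulture);
        return "Video" + stamp + "-" + id.ToString("N") + ".mp4";
    }
}
```

In the app this would be called as BlobNaming.NewVideoBlobName(DateTime.UtcNow, Guid.NewGuid()) in place of the inline string concatenation; passing both values in keeps the helper easy to test.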

The Services running in the Cloud

In Azure, the following are used:

- A single Azure Function App (I called mine EncoderCore) on the Consumption Tier, containing 2 Functions (I called mine FileLoader and EnqueueMessage), plus additional helper methods

- The same Azure Storage account used in the Android app, with a blob container called "videodata"

- Azure Storage Queue called "videooutputnames" created in the same storage account as the "videodata" container/folder where videos get sent to first

- Azure Media Services instance called "cloudencoder" attached to the same storage account as the "videodata" container/folder where videos get sent to first

When we take a new video from the phone app as shown above, an Azure Blob Storage container (a folder) called "videodata" is created if it does not already exist (you can change this to a name that suits). This container is created in the Storage Account identified by the connection string parsed in SaveToBlobStorage in the Android app. To handle this upload event in the cloud, I created an Azure Function called FileLoader, part of my EncoderCore Function App, that is triggered by a blob upload to the "videodata" container. The FileLoader function kicks off an encoding process using the blob that has just been uploaded:

using System;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.EventGrid.Models;

using Microsoft.Azure.WebJobs.Extensions.EventGrid;

using Microsoft.Extensions.Logging;

using System.Threading.Tasks;

using Azure.Storage.Blobs;

using Microsoft.Azure.Management.Media;

using Microsoft.Azure.Management.Media.Models;

using Microsoft.Extensions.Configuration;

using Microsoft.Rest;

using Microsoft.IdentityModel.Clients.ActiveDirectory;

using Microsoft.Rest.Azure.Authentication;

using Azure.Storage.Queues;

using System.IO;

using Newtonsoft.Json;

using System.Text;

namespace EncoderCore {

public static class EncoderCore

{

[FunctionName("FileLoader")]

public static async Task FileLoader([BlobTrigger("videodata/{name}", Connection = "AzureWebJobsStorage")] Stream content, string name, ILogger log, [Blob("videodata/{name}", FileAccess.Write, Connection = "AzureWebJobsStorage")] BlobClient blobClient)

{ //accept common video file extensions and container formats like mkv

if (name.ToLower().Contains(".mp4") || name.ToLower().Contains(".mkv") || name.ToLower().Contains(".mov") || name.ToLower().Contains(".webm"))

{

await BeginEncode(log, name, blobClient);

}

}

private static async Task BeginEncode(ILogger log, string name, BlobClient blobClient)

{

var mediaServicesClient = await CreateMediaServicesClientAsync();

//set encoding settings here, known as a Transform in Media Service terminology

await GetOrCreateTransformAsync(mediaServicesClient, "[YOUR_AZURE_RESOURCE_GROUP]", "[YOUR_MEDIA_SERVICES_NAME]", "testTransform");

log.LogInformation("transform created/found");

//a seedAsset for setting a custom container/folder name

Asset seedAsset = new Asset();

seedAsset.Container = SanitiseString(name.ToLower()) + "outputcontainer";

//create the outputAsset using a seedAsset with a custom folder name and randomised outputAsset name

Asset outputAsset = await mediaServicesClient.Assets.CreateOrUpdateAsync( "[YOUR_AZURE_RESOURCE_GROUP]", "[YOUR_MEDIA_SERVICES_NAME]", "myoutputasset" + $"-{Guid.NewGuid():N}", seedAsset);

log.LogInformation("output container created");

//use a blobClient on the newly uploaded video in the videodata folder to obtain a SAS Url with read permissions

var blobSASURL = blobClient.GenerateSasUri(Azure.Storage.Sas.BlobSasPermissions.Read, DateTime.UtcNow.AddHours(1).ToUniversalTime());

log.LogInformation("sas url ok");

//use the SAS url to get the absoluteUri for the new video uploaded, and use that as the 'input asset' for the Azure Media Services Instance. Using a Stream object will create another blob

JobInputHttp jobInput = new JobInputHttp(files: new[] { blobSASURL.AbsoluteUri });

//create the job output from the outputAsset

JobOutput[] jobOutputs = { new JobOutputAsset(outputAsset.Name) };

//start encoding in the cloud!!

await mediaServicesClient.Jobs.CreateAsync(

"[YOUR_AZURE_RESOURCE_GROUP]",

"[YOUR_MEDIA_SERVICES_NAME]",

"testTransform",

"job1"+ $"-{Guid.NewGuid():N}",

new Job

{

Input = jobInput,

Outputs = jobOutputs,

});

log.LogInformation("job created");

}

////Place Additional Methods here and EnqueueMessage Function Code

}

}

**Note that even with the Stream object available in the Function, I chose not to use this Stream to create the Azure Media Services Input Asset, because using a Stream or byte array means that Azure Media Services would create a separate blob of its own in storage for the already existing blob. This would end up duplicating blobs and doubling storage costs, so the better way to create the Input Asset for AMS is to use the AbsoluteUri (as shown above) of the already existing blob.
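The extension check in FileLoader uses Contains, so a blob named something like notes.mp4.txt would also start an encode. A small sketch of a stricter check, assuming only the final extension should count (VideoFilter and IsSupportedVideo are hypothetical names):

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class VideoFilter
{
    // Same four extensions that FileLoader accepts, compared case-insensitively.
    private static readonly HashSet<string> Extensions =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".mp4", ".mkv", ".mov", ".webm" };

    // True only when the blob name ends in one of the accepted extensions.
    public static bool IsSupportedVideo(string blobName)
    {
        if (string.IsNullOrWhiteSpace(blobName)) return false;
        return Extensions.Contains(Path.GetExtension(blobName));
    }
}
```

FileLoader could then guard the call to BeginEncode with VideoFilter.IsSupportedVideo(name) instead of the chain of Contains calls.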

Additional Methods:

//get credentials for using with AMS

private static async Task<IAzureMediaServicesClient> CreateMediaServicesClientAsync()

{

var credentials = await GetCredentialsAsync();

return new AzureMediaServicesClient(credentials)

{

SubscriptionId = "[YOUR_AZURE_SUBSCRIPTION_ID]",

};

}

//the domain can be found from the AMS instance properties

private static async Task<ServiceClientCredentials> GetCredentialsAsync()

{

ClientCredential clientCredential = new ClientCredential("[YOUR_SERVICE_PRINCIPAL_CLIENTID]", "[YOUR_SERVICE_PRINCIPAL_CLIENTSECRET]");

return await ApplicationTokenProvider.LoginSilentAsync("[YOUR_DOMAIN]", clientCredential, ActiveDirectoryServiceSettings.Azure);

}

private static async Task<Transform> GetOrCreateTransformAsync(

IAzureMediaServicesClient client,

string resourceGroupName,

string accountName,

string transformName)

{

// reuse existing transform if it exists already

Transform transform = await client.Transforms.GetAsync(resourceGroupName, accountName, transformName);

if (transform == null)

{

// Settings

TransformOutput[] output = new TransformOutput[]

{

new TransformOutput

{

Preset = new BuiltInStandardEncoderPreset()

{

// 1080p h264 output.

PresetName = EncoderNamedPreset.H264SingleBitrate1080p,

}

}

};

transform = await client.Transforms.CreateOrUpdateAsync(resourceGroupName, accountName, transformName, output);

}

return transform;

}

//sanitising string to have a well formatted output container name

public static string SanitiseString(string fileName)

{

StringBuilder sb = new StringBuilder(fileName);

sb.Replace(".mp4", "");

sb.Replace(".mkv", "");

sb.Replace(".webm", "");

sb.Replace(".mov", "");

sb.Replace("-", "");

sb.Replace(".", "");

sb.Replace(" ", "");

return sb.ToString().ToLower();

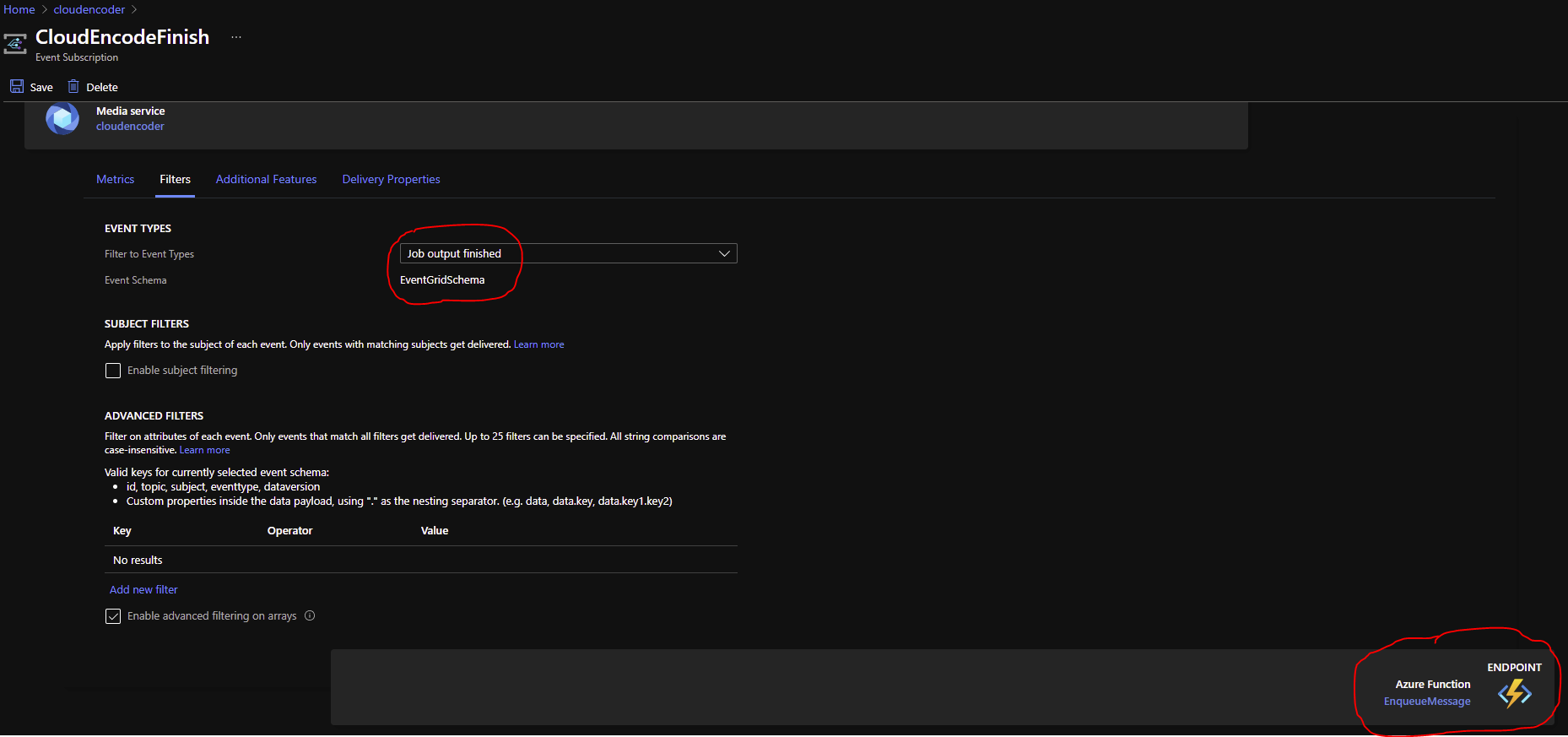

}In the Azure Portal I set an Azure Event that gets sent whenever an encode of a video finishes from the Azure Media Services instance. On the AMS instance I have called "cloudencoder", the Event is filtered on the 'Job output finished' event type only and then the event is sent to a Function called EnqueueMessage that is triggered by an EventGridEvent. In the portal the Event Subscription would look like this:
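The 'Job output finished' event carries its details as JSON in the event's data field. As a rough sketch of pulling the output asset name out of such a payload, the helper below uses System.Text.Json rather than the Newtonsoft.Json classes used in this post. JobEventParser is a hypothetical name, and the only payload shape assumed is an "output" object with an "assetName" string:

```csharp
using System;
using System.Text.Json;

public static class JobEventParser
{
    // Returns output.assetName from the event data JSON,
    // or null when that property is missing or not a string.
    public static string GetOutputAssetName(string eventDataJson)
    {
        using JsonDocument doc = JsonDocument.Parse(eventDataJson);
        JsonElement root = doc.RootElement;
        if (root.ValueKind == JsonValueKind.Object &&
            root.TryGetProperty("output", out JsonElement output) &&
            output.ValueKind == JsonValueKind.Object &&
            output.TryGetProperty("assetName", out JsonElement assetName) &&
            assetName.ValueKind == JsonValueKind.String)
        {
            return assetName.GetString();
        }
        return null;
    }
}
```

Returning null for an unexpected payload lets the caller log and skip the event rather than fail with a NullReferenceException.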

The EnqueueMessage Function below also lives in the EncoderCore Function App. Its job is to wait for events indicating a finished encode from the Azure Media Services instance, and then to add the name of the encoded video's output folder to a Storage Queue called "videooutputnames" for later use (discussed in the next section). The folder name is worked out by first extracting the name of the resulting output asset (assetName) from the JSON data payload that the Azure Media Services instance passes to the function in the EventGridEvent when it finishes an encode. With this asset name, we can query a Media Services client (mediaServicesClient) to find out where the newly created video lives and get its container (folder) name. The function returns this folder name, and the return value is written to the Storage Queue:

//Additional code belonging in the EncoderCore Function App

//Root and Output are used here for deserialising the payload passed in from AMS, where we just need to get the "assetName" property

public class Output

{

public string assetName { get; set; }

}

public class Root

{

public Output output { get; set; }

}

[FunctionName("EnqueueMessage")]

[return: Queue("videooutputnames", Connection = "AzureWebJobsStorage")]

public static async Task<string> EnqueueMessage([EventGridTrigger] EventGridEvent eventGridEvent, ILogger log)

{

var mediaServicesClient = await CreateMediaServicesClientAsync();

//extract the assetname from the event grid Event

var assetname = JsonConvert.DeserializeObject<Root>(eventGridEvent.Data.ToString()).output.assetName;

Asset createdAsset = await mediaServicesClient.Assets.GetAsync("AzureLearn", "cloudencoder", assetname);

log.LogInformation("Enqueue Message ok");

return createdAsset.Container; //this return value is automatically written to the queue

}

For reference, the Function App's package references are as follows (these particular versions make sure that the bindings to other Azure resources work correctly in the Functions too):

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<TargetFramework>netcoreapp3.1</TargetFramework>

<AzureFunctionsVersion>v3</AzureFunctionsVersion>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="Azure.Storage.Blobs" Version="12.10.0" />

<PackageReference Include="Azure.Storage.Queues" Version="12.8.0" />

<PackageReference Include="Microsoft.AspNetCore.AzureKeyVault.HostingStartup" Version="2.0.4" />

<PackageReference Include="Microsoft.Azure.Management.Media" Version="4.0.0" />

<PackageReference Include="Microsoft.Azure.Functions.Extensions" Version="1.1.0" />

<PackageReference Include="Microsoft.Azure.WebJobs.Extensions.EventGrid" Version="2.1.0" />

<PackageReference Include="Microsoft.Azure.WebJobs.Extensions.Storage" Version="5.0.0" />

<PackageReference Include="Microsoft.NET.Sdk.Functions" Version="3.0.11" />

<PackageReference Include="Microsoft.Extensions.DependencyInjection" Version="6.0.0" />

<PackageReference Include="Microsoft.Rest.ClientRuntime.Azure.Authentication" Version="2.4.1" />

<PackageReference Include="Microsoft.WindowsAzure.Management.MediaServices" Version="4.1.0" />

</ItemGroup>

<ItemGroup>

<None Update="host.json">

<CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>

</None>

<None Update="local.settings.json">

<CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>

<CopyToPublishDirectory>Never</CopyToPublishDirectory>

</None>

</ItemGroup>

</Project>

The mini PC

To download the encoded output videos from the cloud and store them locally for the long term, I decided to use a small Windows mini PC that I have, with access to large capacity internal and external disks (these small machines can be found online for around £89 to £149). A mini PC also generally offers reliable thermal performance over time, is low-power and is useful for more than just streaming video. For serving/streaming videos from internal or external storage to other devices on the same home network, my choice is a DLNA (Digital Living Network Alliance) media server that runs on the PC, such as Serviio (my choice, which is free) or Plex. The DLNA service watches a target folder for media and makes that media available to other devices on the same network, such as TVs, tablets, phones and set top boxes running streaming client software like VLC (most TVs have built-in DLNA-compliant software and do not require add-ons like VLC).

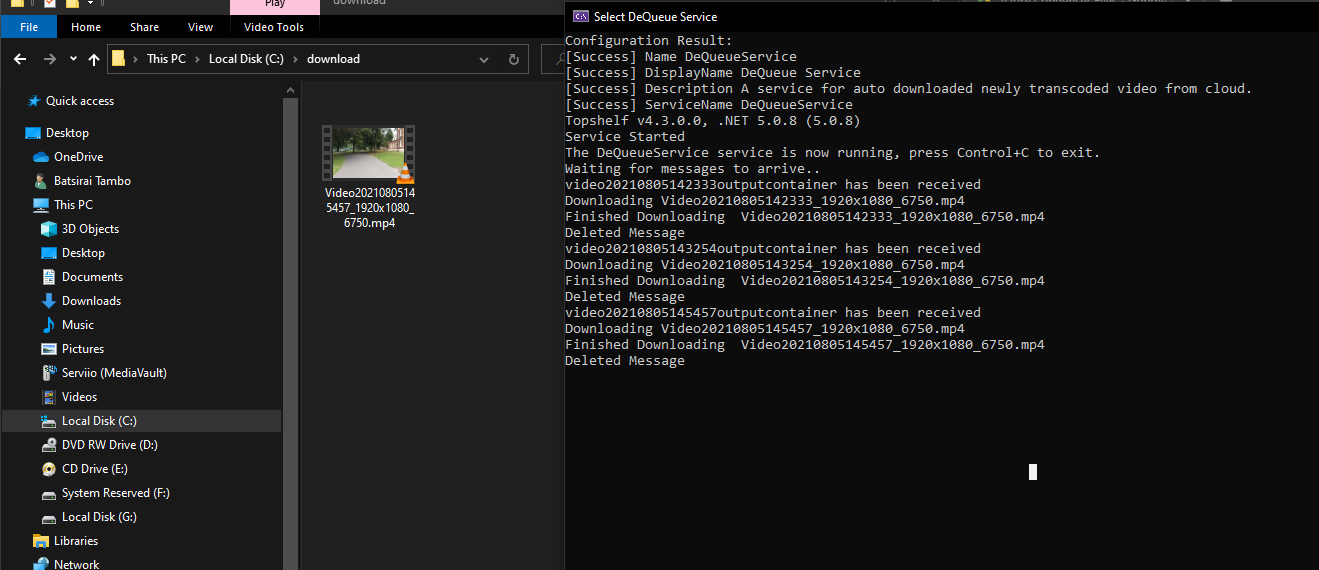

The Windows Service running on the mini PC

With a mini-PC configured with DLNA software like Serviio, it is then possible to have a Windows Service running on the PC that automatically deposits videos downloaded from the cloud into the target watch folder, without us having to do it manually.

The Windows Service is a .NET 5 console application that uses Topshelf. The service listens for messages arriving on the "videooutputnames" queue and uses each message as a pointer to the specific output container that Azure Media Services created for the encode that has just finished. From the output container, the service takes the largest file (which would always be the 1080p output file) and downloads it to local disk. (The other, smaller files in the container are artefacts created by the Azure Media Services instance to support streaming capabilities not covered in this post.)
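The "largest file wins" rule described above can be sketched on its own, independent of the Azure SDK. The helper below is illustrative only: OutputPicker and PickLargestVideo are hypothetical names, blobs are modelled as simple (Name, Size) pairs, and the .mp4 filter is an added assumption about the encoder output rather than something the service in this post does:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class OutputPicker
{
    // Returns the name of the largest .mp4 blob, or null when none exists
    // (for example, an empty or not yet populated output container).
    public static string PickLargestVideo(IEnumerable<(string Name, long Size)> blobs)
    {
        return blobs
            .Where(b => b.Name.EndsWith(".mp4", StringComparison.OrdinalIgnoreCase))
            .OrderByDescending(b => b.Size)
            .Select(b => b.Name)
            .FirstOrDefault();
    }
}
```

Checking the result for null before downloading avoids a NullReferenceException when a queue message points at a container with no video in it.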

using Azure.Storage.Queues;

using Azure.Storage.Queues.Models;

using System.Configuration;

using System;

using System.Linq;

using System.Timers;

using Topshelf;

using System.Threading.Tasks;

using System.IO;

using Azure.Storage.Blobs;

using log4net;

using log4net.Config;

namespace DeQueue

{

public class AutoDequeueService

{

private static ILog log;

private Timer _timer;

private static void Main(string[] args)

{

HostFactory.Run(x =>

{

x.Service<AutoDequeueService>(s=> {

s.ConstructUsing(program => new AutoDequeueService());

s.WhenStarted(program => program.Start());

s.WhenStopped(program => program.Stop());

});

x.EnableServiceRecovery(r => r.RestartService(TimeSpan.FromSeconds(20)));

x.SetServiceName("DeQueueService");

x.SetDisplayName("DeQueue Service");

x.SetDescription("A service for automatically downloading newly transcoded video from the cloud.");

x.RunAsLocalSystem();

x.StartManually();

});

}

public AutoDequeueService()

{

_timer = new Timer(3000) { AutoReset = true };

_timer.Elapsed += (sender, eventArgs) => AwaitMessages();

}

public void Start() {

string path = Directory.GetCurrentDirectory();

//get log4net configuration from log4net.xml(shown below) when using NET5/NetCore

XmlConfigurator.Configure(new FileInfo(Path.Combine(path, "log4net.xml")));

log = LogManager.GetLogger("FileLogger");

_timer.Start();

log.Info("Service Started");

}

public void Stop() { _timer.Stop(); }

////Place Additional Methods here

}

}

App.config:

<?xml version="1.0" encoding="utf-8" ?>

<configuration>

<appSettings>

<add key ="StorageConnectionString" value="[YOUR_STORAGEACCOUNT_CONNECTIONSTRING]"/>

<add key ="QueueName" value="videooutputnames"/>

</appSettings>

</configuration>

log4net.xml:

<?xml version="1.0" encoding="utf-8" ?>

<log4net>

<appender name="console" type="log4net.Appender.ConsoleAppender">

<layout type="log4net.Layout.PatternLayout">

</layout>

</appender>

<appender name="FileLogger" type="log4net.Appender.RollingFileAppender">

<file value="C:/Logs/logfile.txt" />

<appendToFile value="true" />

<rollingStyle value="Date" />

<datePattern value="yyyyMMdd-HHmm" />

<layout type="log4net.Layout.PatternLayout">

<conversionPattern value="%date %level %message%newline" />

</layout>

</appender>

<root>

<level value="ALL" />

<appender-ref ref="console" />

<appender-ref ref="FileLogger" />

</root>

</log4net>

To listen for messages on the queue (Additional Methods):

public void AwaitMessages()

{ //stop timer to stop continuously calling into Runner

_timer.Stop();

Runner().Wait();

}

public static async Task Runner()

{ //connect to the queue with connectionString via queueClient

string connectionString = ConfigurationManager.AppSettings["StorageConnectionString"];

string videoOutputNamesQueue = ConfigurationManager.AppSettings["QueueName"];

QueueClientOptions queueClientOptions = new QueueClientOptions()

{

MessageEncoding = QueueMessageEncoding.Base64

};

QueueClient queueClient = new QueueClient(connectionString, videoOutputNamesQueue, queueClientOptions);

log.Info("Waiting for messages to arrive..");

while (true)

{ //get the message at the front of the queue

QueueMessage receivedMessage = await queueClient.ReceiveMessageAsync();

if (receivedMessage == null) continue;

log.Info(receivedMessage.MessageText + " has been received");

//Download

await Download(receivedMessage.MessageText);

//Delete queue message when done to get next message in queue

await queueClient.DeleteMessageAsync(receivedMessage.MessageId, receivedMessage.PopReceipt);

log.Info("Deleted Message");

}

}

*Note: the 3 second timer delay before AwaitMessages() is called is crucial because it allows Topshelf to fully execute AutoDequeueService.Start() first, so the Windows Service finishes starting and AwaitMessages() is only called 3 seconds later. If AwaitMessages() is called directly from AutoDequeueService.Start(), the Windows Service will remain in the "Starting" state forever and never fully start.
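The loop in Runner asks the queue for the next message again immediately whenever the queue is empty, and it has no way to stop. As a sketch of one alternative, the loop below pauses between empty polls and exits when a CancellationToken is signalled. QueuePoller, PollAsync and the receive/handle delegates are hypothetical names standing in for the queue client calls:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class QueuePoller
{
    // receive: returns the next message text, or null when the queue is empty.
    // handle:  processes one message (for example download, then delete).
    // Returns the number of messages handled before cancellation.
    public static async Task<int> PollAsync(
        Func<Task<string>> receive,
        Func<string, Task> handle,
        TimeSpan idleDelay,
        CancellationToken token)
    {
        int handled = 0;
        while (!token.IsCancellationRequested)
        {
            string message = await receive();
            if (message == null)
            {
                // Queue is empty: wait before polling again, unless cancelled.
                try { await Task.Delay(idleDelay, token); }
                catch (OperationCanceledException) { break; }
                continue;
            }
            await handle(message);
            handled++;
        }
        return handled;
    }
}
```

In the service, receive could wrap queueClient.ReceiveMessageAsync() and handle could call Download and then delete the message, with Stop() cancelling the token so the loop ends cleanly.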

And finally, for saving the files to disk, the following is needed. The largest file in the newly created output folder will be the video:

public static async Task Download(string message)

{

try

{

string connectionString = ConfigurationManager.AppSettings["StorageConnectionString"];

var container = new BlobContainerClient(connectionString, message);

//get the largest file in the container and download it

var blobs = container.GetBlobs();

var datafile = blobs.OrderByDescending(x => x.Properties.ContentLength).FirstOrDefault();

BlobClient blobClient = container.GetBlobClient(datafile.Name);

//save to destination folder which will be the target DLNA folder

Stream filetarget = File.OpenWrite(Path.Combine(@"C:\download", datafile.Name));

log.Info("Downloading " + datafile.Name);

await blobClient.DownloadToAsync(filetarget);

filetarget.Close();

log.Info("Finished Downloading " + datafile.Name);

}

catch (Exception ex)

{

log.Error(ex.Message);

}

}

To install the console app as a Windows Service, build the solution, take the files from the bin output folder and copy them onto the target machine (the PC). With the .NET 5 runtime installed on the machine, open a Command Prompt window as Administrator, navigate to the folder containing the files and run [NAME_OF_YOUR_EXE].exe install start. This installs and automatically starts the service, which will now be waiting for new videos travelling from the Android camera app, to the cloud, and on to the PC! The service will also be listed in the Windows Services list, where it can be stopped or started as needed.

Some Results!!

Uncompressed files here:

https://drive.google.com/file/d/1uqTZBb7ajLI-eKAlCrpgh9gtk9RQABks/view?usp=sharing (4k uncompressed)

https://drive.google.com/file/d/1VE9GVsdssOBs3KHB5o79VLoQYbkdj8x7/view?usp=sharing (1080p output from Azure)

Conclusions

Being able to leverage the cloud when a 4k video needs to be downscaled to 1080p is useful because the phone does not have to do the heavy lifting of encoding on-device, which would reduce battery life. Having an automated, low-friction pipeline that archives personal recorded videos in long term cold storage can give the user better peace of mind about the safe keeping of their video data. The user simply records a video, stops the video and the rest happens automatically. The idea is to make native videos shot on modern smartphone cameras viewable on older playback hardware back at home (with the help of DLNA services), since playback hardware is upgraded less often. Manual uploads to the "videodata" folder from the Portal, Storage Explorer or CLI work too: they will still trigger an encode in AMS and then a download by the running Windows Service.

Some unknowns came out of this. Access to the full camera software stack is somewhat limited from the Xamarin libraries available (perhaps .NET MAUI will change this); I found that in the native video camera viewfinder, features such as slow-motion video were not available. I also still don't know whether it's possible to retain HDR metadata in the Media Services output Asset (1080p HDR is possible) when the smartphone is recording 4k HDR.

This use case should still scale in the future as smartphone cameras record 8k video (already available on the Samsung Galaxy S21 Ultra, for example): the cloud Transform can simply be updated to scale down from 8k to 4k, since most users are unlikely to have an 8k display and an 8k capable decoder at the time of this post.