Azure Media Services Tuning: Project Condenser Update

Off the back of Project Condenser earlier, I ended up with a few loose-end questions that I wanted to gather here, test and resolve.

In the original conception of the cloud encode, the H.264 1080p cloud transform I used was designed to produce an output video with a fixed video bitrate of 6.75Mbps and AAC audio at 128kbps. This works out OK, but it is significantly lower than the average 45Mbps bitrate at which the HTC 10 I used recorded 4K video. The HTC 10 also recorded lossless FLAC audio (which can run at 1Mbps to 1.5Mbps, just for audio!!) at a sampling rate of 96kHz, because it is one of a handful of smartphones with very high quality audio hardware. For comparison, Spotify Premium audio runs at 320kbps.
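To put those numbers side by side, here is a small C# sketch (the class and method names are hypothetical). It computes the raw PCM bitrate for 96kHz audio, assuming 24-bit stereo because the bit depth isn't stated above, and the fraction of the source bitrate that the original output settings keep.

```csharp
// Hypothetical helpers for comparing the bitrates discussed above.
public static class BitrateComparison
{
    // Uncompressed PCM bitrate in bits per second:
    // samples per second x bits per sample x channels.
    public static long PcmBitsPerSecond(int sampleRateHz, int bitDepth, int channels) =>
        (long)sampleRateHz * bitDepth * channels;

    // Fraction of the source bitrate kept in the output (1.0 = same bitrate).
    public static double RetainedFraction(double outputBitsPerSecond, double sourceBitsPerSecond) =>
        outputBitsPerSecond / sourceBitsPerSecond;
}
```

Under the 24-bit stereo assumption, PcmBitsPerSecond(96000, 24, 2) gives 4,608,000 bits per second (about 4.6Mbps) before FLAC's lossless compression, over 14 times Spotify's 320kbps. RetainedFraction(6.75e6, 45e6) is 0.15, so the original transform kept about 15% of the source video bitrate, and 128kbps AAC keeps roughly 8.5% to 12.8% of a 1Mbps to 1.5Mbps FLAC track.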

What does it all mean? It means that we start with extremely high quality source material, which gives us the flexibility to still produce a high quality end result, even when that output is at a lower 1080p resolution. With source material this good, we don't necessarily need to accept the default 6.75Mbps bitrate set by the presets in the Azure SDK. Instead, by creating our own preset with bitrate settings of our choosing, we can produce a 1080p output of better quality than the original one. For example, our new 1080p output could run at 20Mbps, much closer to the source's ~45Mbps, helping to keep more of the crispness of the original 4K video!!
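Raising the bitrate also raises the output file size in direct proportion. A rough estimate for a constant-bitrate stream is bitrate x duration / 8; the hypothetical helper below does that arithmetic, treating 1MB as 10^6 bytes and ignoring audio and container overhead.

```csharp
// Hypothetical helper: estimates the size of a constant-bitrate video stream.
public static class SizeEstimate
{
    // bits per second x seconds / 8 = bytes (no audio, no container overhead).
    public static double Bytes(double bitsPerSecond, double seconds) =>
        bitsPerSecond * seconds / 8.0;

    // Megabytes (10^6 bytes) per minute of video at the given bitrate.
    public static double MegabytesPerMinute(double bitsPerSecond) =>
        Bytes(bitsPerSecond, 60) / 1e6;
}
```

With these assumptions, each minute of video is about 50.6MB at 6.75Mbps, 150MB at 20Mbps and 337.5MB at the source's 45Mbps, which is worth keeping in mind for the storage side of the archive.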

Back to the Transform

To increase the output video bitrate, we can step back into the output transform code in the Azure Function App from Project Condenser. We can change it as follows:

```csharp
// First, change every place that references the transform name,
// such as where we fetch or create the new transform...
await GetOrCreateTransformAsync(client, "[YOUR_RESOURCE_GROUP_NAME]",
    "[YOUR_AMS_INSTANCE_NAME]", "[YOUR_NEW_TRANSFORM_NAME]");

// ...and the block where the Job is created
await client.Jobs.CreateAsync(
    "[YOUR_RESOURCE_GROUP_NAME]",
    "[YOUR_AMS_INSTANCE_NAME]",
    "[YOUR_NEW_TRANSFORM_NAME]",
    "job1" + $"-{Guid.NewGuid():N}", // job name with a random suffix so each job is unique
    new Job
    {
        Input = jobInput,
        Outputs = jobOutputs,
    });
```

Then, in GetOrCreateTransformAsync, define the new transform:

```csharp
// transformName now receives the new transform's name, e.g. "[YOUR_NEW_TRANSFORM_NAME]"
private static async Task<Transform> GetOrCreateTransformAsync(
    IAzureMediaServicesClient client,
    string resourceGroupName,
    string accountName,
    string transformName)
{
    // Get the transform if it already exists.
    // Note: in newer versions of the Microsoft.Azure.Management.Media SDK, GetAsync may throw
    // an ErrorResponseException (404 Not Found) instead of returning null for a missing transform.
    Transform transform = await client.Transforms.GetAsync(resourceGroupName, accountName, transformName);

    if (transform == null)
    {
        TransformOutput[] output = new TransformOutput[]
        {
            new TransformOutput(
                new StandardEncoderPreset(
                    codecs: new Codec[]
                    {
                        // Copy the original audio bitstream without re-encoding it
                        new CopyAudio(),
                        new H264Video(
                            // Key frame every 2 seconds (also the documented default)
                            keyFrameInterval: TimeSpan.FromSeconds(2),
                            layers: new H264Layer[]
                            {
                                new H264Layer(
                                    bitrate: 20000000, // 20Mbps, in bits per second
                                    width: "1920",
                                    height: "1080",
                                    label: "HDUltra-20Mbps" // substituted for {Label} in the output file name
                                )
                            }
                        ),
                    },
                    // Output file name format customisation
                    formats: new Format[]
                    {
                        new Mp4Format(
                            filenamePattern: "Video-{Basename}-{Label}-{Bitrate}{Extension}"
                        )
                    }
                ),
                onError: OnErrorType.StopProcessingJob,
                relativePriority: Priority.High // prioritise encodes that use this Transform
            )
        };

        // Create the Transform with the output defined above
        transform = await client.Transforms.CreateOrUpdateAsync(resourceGroupName, accountName, transformName, output);
    }

    return transform;
}
```

Going ALL IN, 4K60 everything

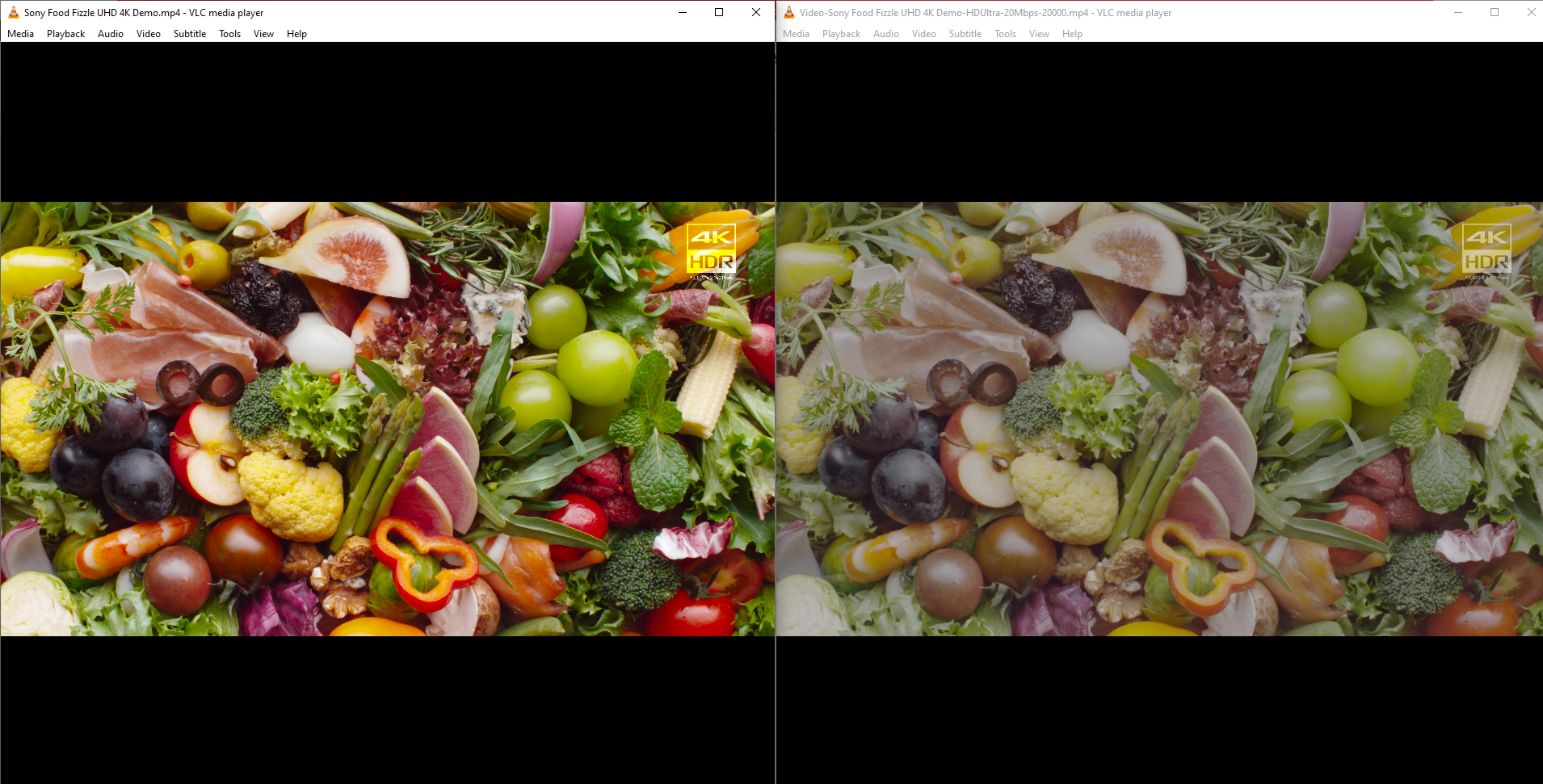

To see the new transform applied to extremely high quality videos, I tried creating a 1080p encode of the popular 'LG Chess Demo' video, which is 4K 60fps with HDR10, by uploading it directly to the "videodata" folder/container created in Project Condenser using Azure Storage Explorer. The solution I created in that project doesn't require the video to come from a mobile phone: uploading any new video to that folder automatically triggers the sequence of events that encodes it. From my findings, this particular video in its original form could not be converted by Azure Media Services (more on this shortly). I then tried another 4K 60fps HDR10 sample video called 'Sony Food Sizzle', and the results are striking, if not surprising.

As you can see above, the loss of colour in particular is drastic. The loss of detail going from 4K HDR to 1080p SDR, however, is mitigated by the reasonably high 20Mbps bitrate in this case. This kind of compression and loss is what happens when content is uploaded to most social media platforms, only more aggressively, so that the platform can save on costs and make uploaded content available to users sooner. Instead of 1080p at 20Mbps retaining more detail, your high quality, high bitrate 4K media may get compressed to, say, 1080p at 5Mbps (or worse, 720p in some cases), which in my opinion is a tragedy, as it heavily undermines the capabilities of high quality modern cameras. Here are the 2 video files for comparison (download them, do not stream). An HDR display is not strictly necessary but is the best way to carry out the comparison; the colour difference is still clearly visible on regular SDR displays:

4K HDR10 Sample (726MB)

1080p SDR sample output from Azure Media Services (196MB)
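One rough, codec-agnostic way to see why 20Mbps holds up better than a typical social media encode is bits per pixel per frame: bitrate / (width x height x frame rate). The helper below is a hypothetical sketch; it assumes the 1080p output keeps the source's 60fps, and comparisons across different codecs (HEVC source, H.264 output) are only indicative.

```csharp
// Hypothetical helper: average bits available per pixel per frame.
public static class BitsPerPixel
{
    public static double Compute(double bitsPerSecond, int width, int height, double framesPerSecond) =>
        bitsPerSecond / ((double)width * height * framesPerSecond);
}
```

At 1920x1080 and 60fps, Compute(20e6, 1920, 1080, 60) is about 0.16 bits per pixel, while Compute(5e6, 1920, 1080, 60) is about 0.04, a quarter of the budget for every pixel of every frame.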

But given the original intention and goal of the project (to make high quality video content more viewable on older media players and displays by encoding it in the cloud to lower resolutions, and to make it archivable), such sacrifices in quality may be necessary in the short term.

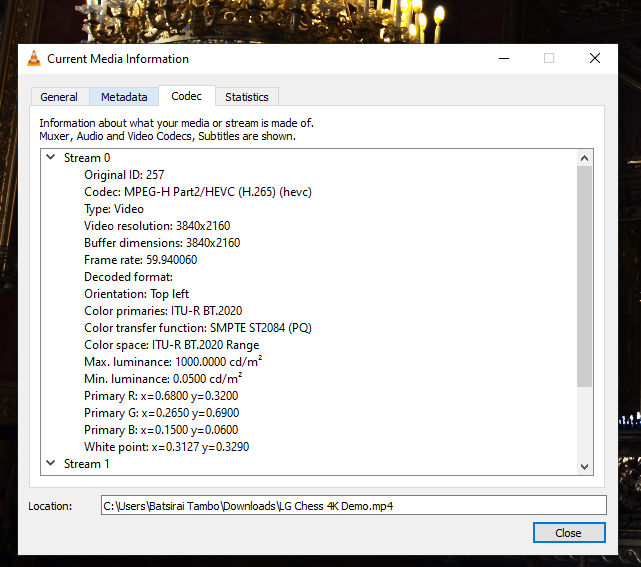

Now, what about the LG Chess demo video that failed? I discovered that Azure Media Services appears to support 4K H.265 videos with the HEVC tag hvc1 rather than the tag hevc; at least, that is my working theory at the time of writing. A 4K HDR video with the metadata below is rejected as unsupported input media:

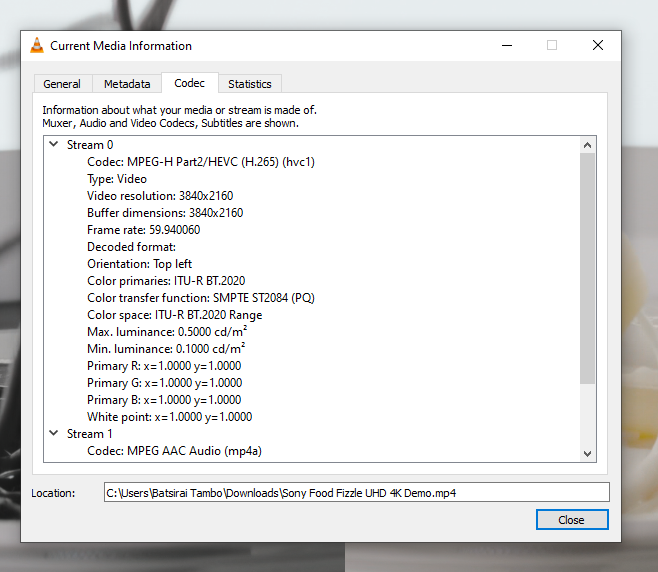

The Food Sizzle sample video has slightly different metadata (with the hvc1 tag), and works well in Azure Media Services as input media for encoding to other formats.
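If you want to check which tag a file carries before uploading it, the tag is the four-character code of the video track's sample entry inside the MP4 sample description (stsd) box. The C# sketch below is a hypothetical, minimal MP4 (ISO base media file format) box walker, not a full parser: the class name is my own, it expects the whole file in a byte array, and it only descends through moov, trak, mdia, minf and stbl.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: lists the sample-entry codes (e.g. "hvc1", "avc1", "mp4a")
// declared in the stsd boxes of an MP4 file that has been read into memory.
public static class Mp4SampleEntries
{
    // Boxes on the path moov/trak/mdia/minf/stbl that only contain other boxes.
    private static readonly HashSet<string> Containers =
        new HashSet<string> { "moov", "trak", "mdia", "minf", "stbl" };

    public static List<string> Read(byte[] data)
    {
        var codes = new List<string>();
        Walk(data, 0, data.Length, codes);
        return codes;
    }

    private static void Walk(byte[] d, long start, long end, List<string> codes)
    {
        long pos = start;
        while (pos + 8 <= end)
        {
            long size = ReadUInt32(d, pos);
            string type = Encoding.ASCII.GetString(d, (int)pos + 4, 4);
            long header = 8;
            if (size == 1) // a 64-bit "largesize" follows the type field
            {
                if (pos + 16 > end) return;
                size = (long)((ulong)ReadUInt32(d, pos + 8) << 32 | ReadUInt32(d, pos + 12));
                header = 16;
            }
            else if (size == 0) // the box runs to the end of its parent
            {
                size = end - pos;
            }
            if (size < header || size > end - pos) return; // malformed or truncated: stop

            if (Containers.Contains(type))
                Walk(d, pos + header, pos + size, codes);
            else if (type == "stsd")
                ReadStsd(d, pos + header, pos + size, codes);
            pos += size;
        }
    }

    // stsd body: 4 bytes of version/flags, a 4-byte entry count, then the sample entries.
    private static void ReadStsd(byte[] d, long start, long end, List<string> codes)
    {
        if (start + 8 > end) return;
        long count = ReadUInt32(d, start + 4);
        long pos = start + 8;
        for (long i = 0; i < count && pos + 8 <= end; i++)
        {
            long size = ReadUInt32(d, pos);
            codes.Add(Encoding.ASCII.GetString(d, (int)pos + 4, 4));
            if (size < 8 || size > end - pos) return;
            pos += size;
        }
    }

    // Big-endian unsigned 32-bit read.
    private static uint ReadUInt32(byte[] d, long p) =>
        (uint)d[p] << 24 | (uint)d[p + 1] << 16 | (uint)d[p + 2] << 8 | d[p + 3];
}
```

Calling Mp4SampleEntries.Read(File.ReadAllBytes(path)) returns one code per sample entry across all tracks (video and audio), so a file tagged like the Food Sizzle sample would include "hvc1". File.ReadAllBytes keeps the sketch simple but limits it to files under 2GB; larger sources would need a streaming reader.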

NB: in Azure, the encode of the 1min 22s long Food Sizzle 4K sample video took 4mins to complete. That isn't too long a wait, considering that the cloud encoder initially held the video in a 'Scheduled' status for about 2mins before actually encoding it, so the real encode time was about 2-3mins.
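To compare cloud encodes fairly, it helps to separate queueing from processing and express the result relative to the clip length. The hypothetical helper below subtracts the time spent in the 'Scheduled' state and returns seconds of processing per second of source video (lower is faster).

```csharp
using System;

// Hypothetical helper: processing time per second of source video, excluding queue time.
public static class EncodeSpeed
{
    public static double SecondsPerSourceSecond(TimeSpan total, TimeSpan queued, TimeSpan clipLength)
    {
        TimeSpan processing = total - queued; // time actually spent encoding
        if (processing < TimeSpan.Zero || clipLength <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException(nameof(total), "Invalid timings.");
        return processing.TotalSeconds / clipLength.TotalSeconds;
    }
}
```

For the Food Sizzle run, SecondsPerSourceSecond(TimeSpan.FromMinutes(4), TimeSpan.FromMinutes(2), TimeSpan.FromSeconds(82)) is about 1.46; if the real encode took closer to 3mins, it rises to about 2.2.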

No limits, tell us about 8K video!!

It turns out that Azure Media Services can also encode a 1080p output file from an 8K source. Using the same 1080p 20Mbps transform, the results are excellent. The encode took 12mins for a 5min long 8K video. I did use a relatively low bitrate, non-HDR 8K video clocking in at just 906MB; an even higher fidelity source would have taken longer to encode to 1080p. Here are the 2 files for comparison again:

8K sample video download (good luck playing this natively on your machine!! There is no audio, but the video is fully intact and valid for playback. Credit: Armadas on YouTube) (906MB)

1080p output video download (no audio), down from 8K with Azure Media Services (776MB; this size is rather inflated for the output resolution because the 8K source already runs at around 20Mbps, so in this case we would probably want a lower bitrate in a new, separate Azure Media Services transform)
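A file's average bitrate can be sanity-checked from its size and running time: size in bytes x 8 / seconds. The hypothetical helper below does this and makes the megabyte definition explicit, since Windows reports sizes in binary units (2^20 bytes) while labelling them "MB".

```csharp
using System;

// Hypothetical helper: average bitrate implied by a file's size and running time.
public static class AverageBitrate
{
    public const double DecimalMegabyte = 1_000_000; // MB as 10^6 bytes
    public const double BinaryMegabyte = 1_048_576;  // MiB (2^20 bytes)

    // Megabits per second, including container overhead and any audio tracks.
    public static double Mbps(double sizeInMegabytes, TimeSpan duration,
                              double bytesPerMegabyte = DecimalMegabyte) =>
        sizeInMegabytes * bytesPerMegabyte * 8 / duration.TotalSeconds / 1e6;
}
```

Assuming the 8K clip runs exactly 5 minutes, the 906MB source averages about 24.2Mbps (25.3Mbps if MB means 2^20 bytes), and the 776MB output about 20.7Mbps, close to the 20Mbps layer. That is why the output barely shrinks. The same check on the 196MB Food Sizzle output over 1min 22s gives roughly 19.1 to 20.1Mbps, depending on the megabyte definition.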

Wrap Up

Encoding a 4K HDR output seems to be restricted to Live Event streaming, and only when using a passthrough feed direct from the camera (more here). Regular 4K encoding is available today in Azure Media Services, and it will become more useful in the future, when 8K video is the new high-end standard and 4K becomes the norm.

Finally, while I own a OnePlus 7 Pro (on Android 11) capable of 4K 60fps video recording, I didn't use it in the original project because the Xamarin camera library automatically forced the OnePlus camera viewfinder to 1080p 30fps video, with no option in Settings to change it to 4K 60fps. On the other hand, running exactly the same mobile platform code, my HTC 10 (on Android 8.0) was set to 4K 30fps automatically and had access to Settings to drop to 1080p 30fps if needed. I'm still investigating what might be happening with Android 11 or the Xamarin camera library, but I may get better flexibility with another Android camera library or .NET MAUI in the future. I also haven't done additional test uploads of videos directly from the OnePlus 7 Pro into Azure blob storage: it records 4K 60fps at roughly 120Mbps, and files that large would add up quickly in ingress and storage costs in Azure just to demonstrate for this post. I might update this post should I do those in the future.